A bipartisan group of lawmakers is pursuing legislation to prevent the Pentagon from using artificial intelligence (AI) to launch nuclear weapons.

The Block Nuclear Launch by Autonomous Artificial Intelligence Act aims to safeguard the nuclear command and control process from future policy changes that might allow AI to make nuclear launch and targeting decisions.

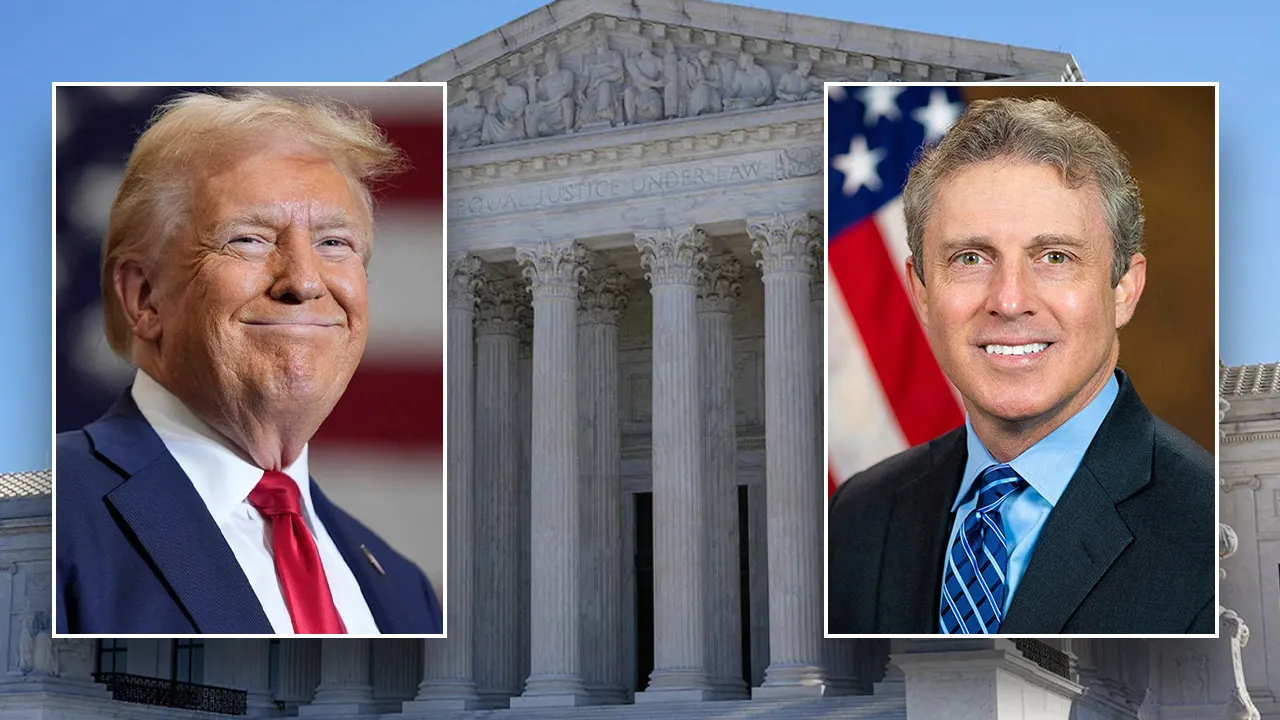

Reps. Ken Buck (R-Colo.), Don Beyer (D-Va.), Ted Lieu (D-Calif.), and Sen. Edward Markey (D-Mass.) introduced the legislation in both houses of Congress on April 26.

“While U.S. military use of AI can be appropriate for enhancing national security purposes, use of AI for deploying nuclear weapons without a human chain of command and control is reckless, dangerous, and should be prohibited,” Buck said in a prepared statement.

“I am proud to co-sponsor this legislation to ensure that human beings, not machines, have the final say over the most critical and sensitive military decisions.”

AI No Substitute for ‘Human Judgment’

The Pentagon’s 2022 Nuclear Posture Review states that its current policy is to “maintain a human ‘in the loop’ for all actions critical to informing and executing decisions by the President to initiate and terminate nuclear weapon employment.”

That policy appears informal, however, and does not apply to non-nuclear autonomous lethal systems that, despite popular misconception, are not prohibited by the Pentagon.

The bill would codify the Pentagon’s existing policy on AI and nuclear weapons by mandating that no federal funds could be used for the launch of any nuclear weapon by an automated system without meaningful human control.

Meaningful human control, according to the bill, would include human decision-making over the selection and engagement of nuclear targets, as well as the time, location, and manner of such use.

“AI technology is developing at an extremely rapid pace,” Lieu said. “While we all try to grapple with the pace at which AI is accelerating, the future of AI and its role in society remains unclear.

“AI can never be a substitute for human judgment when it comes to launching nuclear weapons.”

Risk of Nuclear Conflict With China Escalates

Since the Cold War, the United States has maintained a number of second-strike nuclear capabilities on what is called “launch-on-warning” status.

This means that the nation will launch nuclear weapons at a perceived nuclear aggressor upon detecting incoming missiles rather than waiting to verify that it has actually been struck.

According to the Pentagon’s 2022 China Power Report, China is also implementing a launch-on-warning posture, called “early warning counterstrike,” in which warning of a missile strike leads to a counterstrike before an enemy first strike can detonate. China has conducted exercises involving launch-on-warning nuclear responses since at least 2017.

Thus, should either nation allow AI to make decisions about nuclear launches, a false positive on nuclear tracking systems could quickly escalate into a full-blown nuclear war.

Such a scenario could also be exacerbated by other recent technological developments, such as China’s development of technologies that could seize or otherwise interfere with U.S. satellite infrastructure, including missile targeting systems.

“As we live in an increasingly digital age, we need to ensure that humans hold the power alone to command, control, and launch nuclear weapons—not robots,” said Markey.

“We need to keep humans in the loop on making life or death decisions to use deadly force, especially for our most dangerous weapons.”