In what appears to be a world first, a government agency in New Zealand has banned employees from using AI technology over data and privacy concerns.

The New Zealand Ministry of Business, Innovation, and Employment (MBIE) issued the ban on tools like ChatGPT over concerns that sensitive information entered into such platforms could later be retrieved, RNZ reported.

AI-powered chatbots trawl through information online, which they then process to “generate” a response based on whatever prompts they are given by a user. AI programs can now generate answers to questions, poetry, code, and even musical compositions.

However, due to data concerns, companies like Apple, Samsung, Amazon, JPMorgan Chase, Deutsche Bank, and Goldman Sachs have banned staff from using the tech.

Governments Looking to Regulate AI

Governments are also moving to establish some controls over how to use AI.

In Australia, the government has announced it will establish guardrails around AI’s development, and stated it does not recommend “their use for delivery of services or making decisions.”

Minister for Industry and Science Ed Husic said that despite AI now being a prevalent part of people’s lives, there was a demand for some sort of regulation from the community.

“The Albanese government wants to basically set up the next reforms that can give people confidence that we’re curbing the risks, maximising the benefits, and giving people that, as I said, that assurance that the technology is working for us and not the other way around,” Husic told ABC radio on June 1.

In New Zealand, the government’s chief digital officer at the Department of Internal Affairs was also working on guidance for agencies.

In Canada, the country’s privacy watchdog has launched its own investigation into ChatGPT.

“AI technology and its effects on privacy is a priority for my office,” Privacy Commissioner Philippe Dufresne said in a media release in April.

“We need to keep up with—and stay ahead of—fast-moving technological advances, and that is one of my key focus areas as commissioner.”

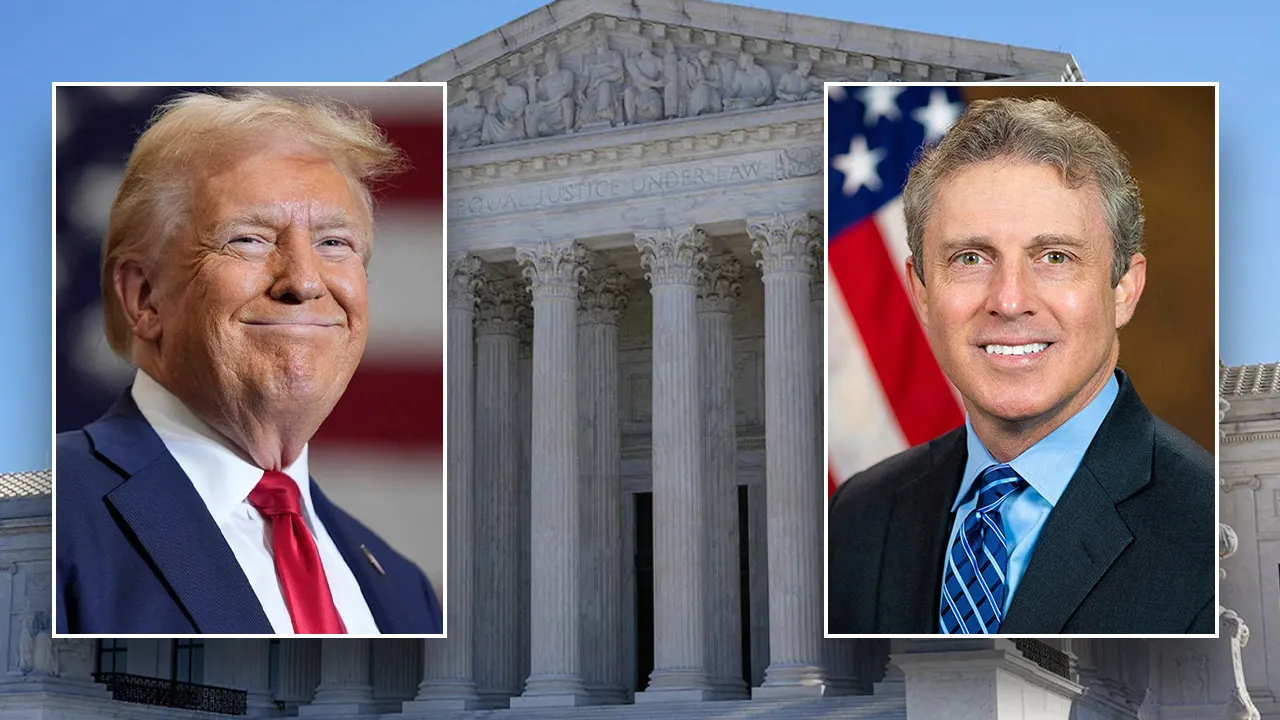

Meanwhile, in the United States, government officials are also concerned, with Rep. Jay Obernolte (R-Calif.) telling The Epoch Times that AI has an “uncanny ability to pierce through personal digital privacy” that could evolve into surveillance.

“I worry about the way that AI can empower a nation-state to create, essentially, a surveillance state, which is what China is doing with it,” Obernolte said.

Google AI Raises Concerns Over Manipulation

The concerns come on the back of growing alarm at the ability of AI to manipulate humans following Google’s introduction of a “highly requested” AI search feature in its Gmail service.

The new feature is intended to improve the search experience for Google’s mobile users and is slated to help people find “exactly what you’re looking for.”

“When searching in Gmail, machine learning models will use the search term, most recent emails, and other relevant factors to show you the results that best match your search query. These results will now appear at the top of the list in a dedicated section, followed by all results sorted by recency.”

However, in a March 21 post on Twitter, author Kate Crawford raised concerns over the new AI after Google’s Bard AI tool told her where its dataset comes from and explained that one of the sources is Google’s internal data, including data from Gmail.

“I’m assuming that’s flat-out wrong; otherwise, Google is crossing some serious legal boundaries,” Crawford said at the time.

Google later replied on Twitter that the Bard platform was “not trained on Gmail data.”